Fixing security bugs

This article covers some ways I’ve gotten security bugs fixed inside a company.

Finding bugs is a technical problem; fixing them is a human problem.

Hacking: Exciting.

Finding bugs: Exciting.

Fixing those bugs: Not exciting.

The thing is, the finish line for our job in security is getting bugs fixed¹, not just found and filed. Doing this effectively is not a technology problem. It is a communications, organizational² and psychology problem.

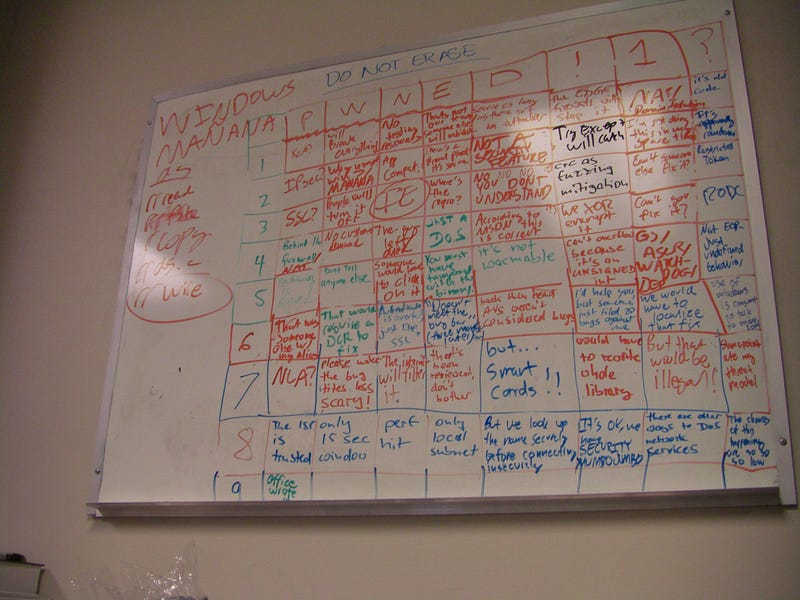

A decade ago on the Microsoft vista pentest we³ found some bugs. Then as we worked to get those bugs fixed we got a lot of excuses back: “but that would be illegal”, “just a denial of service”, “ but its a perf hit”, “but the victim would have to click on it”. This happened enough times that a bingo board of the excuses took shape:

The point is that getting security bugs fixed is sometimes a hassle, and this isn’t a new problem. It is common for critical security flaws to be fixed quickly while the long tail of lower-priority issues (that should still be fixed!) drags on.

Over the years I’ve gotten a lot better at getting these types of issues fixed.

Things that worked for me:

- Is prioritization correct? Bad security teams think everything is critical, in which case nothing is. Everything in security, engineering, and life is tradeoffs. Don’t push for something that doesn’t matter. Preserve your credibility by not causing security fatigue for engineering teams.

- Is the bug clear? All security bugs should cover:

  * Impact.

  * How the issue works.

  * Clear next steps: either a fix or what to investigate next.

  * Why you assigned it to that person.

  * What you expect from the assignee.

  Jack, Philippe and Stephen are all great examples of people who clearly communicate complicated security issues.

- Explain why security matters. I believe all security tasks should link to something explaining why security matters, how fixing discrete bugs is part of that, and a clear-eyed description of what might happen if the bug doesn’t get fixed. Done well, this draws the reader into the world and mission of the security team.

- Empathize. Can you imagine the stress of getting a critical security bug in your code? That stress is multiplied by a murky, accusatory bug report demanding swift action, and multiplied again when English is not your first language. Show empathy through clear, concise, non-emotional bug reports free of FUD. Empathy also means using the right medium for the person: email, Slack, IRC, a note on a diff or just showing up at their desk. Respecting different people’s workflows matters.

- Nagging or guilting engineers into fixing stuff is miserable for both sides. If you go down this path, think carefully about the “obvious” solution of automating it with a bot that pings tasks. I’ve had good luck with it, but only when done carefully. Sometimes it really is as simple as reminding someone a few times before they get to work on it.

- Escalating to a manager. Not ideal, but not as “bad” as it feels to most people. Often the manager has the most context on priorities and on who has free time on their team. Make sure the email reads more like “I want to make sure you are aware of this and I would like it to be fixed” than “engineer $foo has dropped this task n times”.

- Fixing them yourself is great sometimes, non-optimal most times. Any security team should be capable of this, but it should be exercised sparingly. When the owning engineers do the work themselves, the lesson goes back to the people it is most relevant to and who most need it.

- A/B test wording. “You are in the small minority of people who have not fixed your open security bug” is a line that works wonders. I stole this wording from an excellent Planet Money episode about A/B testing the best words to get people in Italy to pay their taxes. It works for our field too.

- Visualization, leaderboards, gamification. I’ve seen security debt graphs used to nudge senior leaders to invest in security work, leaderboards praising the teams that fix their bugs fastest, and many kinds of gamification (“fix 10 bugs, get a t-shirt!”). All of these can work or fail depending on the composition of your engineering org and the values of your company. Kelly pointed me to the broader area of social proof, which sounds promising, but I’ve personally only tried the above.

- Not a tactic but an observation. Sometimes you can actually see the 5 stages of grief play out over a security bug: Anger → Denial → Bargaining → Depression → Acceptance. Being told your code put the company and its users at risk can be emotional, and I’ve seen this happen many times: first teams are mad, then they try to argue down the severity, then they are slow to fix it, and finally they thank us for improving their code. There is not much to do here except recognize the emotion involved and try not to take it personally.

- Cupcakes. If all else fails, try cupcakes. On the one-year birthday of their bugs, we delivered cupcakes to the desks of 30 or so engineers who had not fixed longstanding bugs assigned to them. About a third of them had their issues fixed within the next two weeks. SUCCESS.
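The reminder bot from the nagging bullet is the one tactic here that is partly a technology question, so here is a minimal, hypothetical Python sketch of what “done carefully” might mean. Everything in it is an assumption for illustration: the names `SecurityBug`, `next_action` and `reminder_text`, and the thresholds (first reminder after 7 days, doubling gaps between reminders, escalation to a manager after 3 reminders). It backs off instead of piling on, stops nagging and hands off to a human after a few pings, and only uses the social-proof line from the A/B testing bullet when that line is actually true.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical policy knobs; tune them for your own org.
FIRST_PING_AFTER = timedelta(days=7)
MAX_PINGS = 3  # after this many reminders, stop nagging and involve a manager


@dataclass
class SecurityBug:
    bug_id: str
    assignee: str
    filed_at: datetime
    pings_sent: int = 0
    last_ping_at: Optional[datetime] = None


def next_action(bug: SecurityBug, now: datetime) -> str:
    """Return 'wait', 'ping' or 'escalate' for an open bug."""
    # Back off exponentially: wait 7 days, then 14, then 28, ... between reminders.
    interval = FIRST_PING_AFTER * (2 ** bug.pings_sent)
    since = bug.last_ping_at or bug.filed_at
    if now - since < interval:
        return "wait"
    # Enough automated reminders; hand off to a person instead of nagging more.
    if bug.pings_sent >= MAX_PINGS:
        return "escalate"
    return "ping"


def reminder_text(bug: SecurityBug, open_share: float) -> str:
    """Build a short, non-accusatory reminder.

    open_share: fraction of assignees who still have an open security bug.
    """
    text = f"Hi {bug.assignee}, a friendly reminder that security bug {bug.bug_id} is still open."
    # Only use the social-proof line when it is actually true.
    if open_share < 0.5:
        text += (" You are in the small minority of people who have not"
                 " fixed your open security bug.")
    return text
```

A scheduled job (say, daily) could call `next_action` on every open bug and send `reminder_text` only on `"ping"`; the `"escalate"` case maps to the manager email from the escalation bullet rather than another automated nag.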

Conclusion

I’ve seen many exasperated security people lean too heavily on the moral component of fixing security bugs (it’s the right thing to do, how could you not!) and be surprised when that plea doesn’t resonate.

Security is an inherently cross-functional discipline. We have to work across many teams of varying quality and enthusiasm for security. The social engineering involved in getting bugs fixed can be complex, so hopefully these notes help.

Footnotes

1. Not just found and fixed but prevented for next time, eradicated across the codebase, well-understood by the company. I am using “fixed” as shorthand.

2. This all assumes you work at a company that cares about security and has the appetite to fix things but just isn’t getting it done. I’ve written about this with more color around how to start from zero here.

3. I played a small part here finding a few bugs and mostly heard this story from others after walking past this board.