Outcomes > bugs

A reasonable mission for an application security team is to find and fix security bugs in a codebase. I once held this view, but I now think it is subtly wrong: what we actually care about is outcomes, not bugs.

A bug is a discrete flaw in software.

An outcome is a bug plus how it was used. How did the bug's story end? Did it lead to a breach, or was it snuffed out early in development? Medicine offers a parallel: doctors ultimately care about the patient's outcome, and the type of cancer and how early it was found are footnotes to that story.

For example: an RCE bug that no one has found is less important than an XSS that is being actively exploited to harm users.

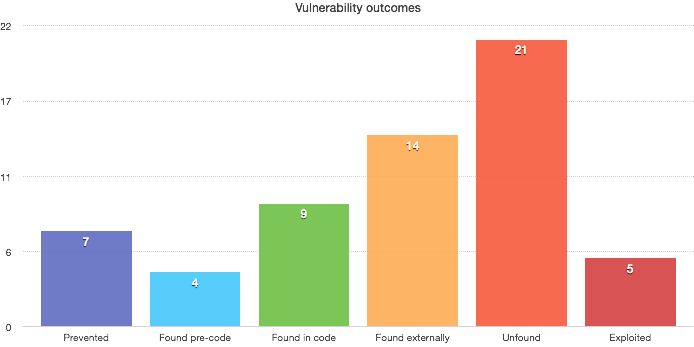

Imagine we have perfect knowledge of all the bugs in existence in our codebase¹, the breakdown might look like:

From this, our goal is clear: shift left.²

The more bug outcomes that land in the leftmost buckets, the better for security.

We can view all security efforts through this lens.

- Static analysis run at check-in time moves a bug from "found externally" to "found internally".

- Design reviews catch bugs before they become code.

- A bug bounty program provides a pressure-release valve for bugs that might otherwise be exploited.

- Good security training moves bugs into "prevented".
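To make the model concrete, here is a minimal Python sketch. The four bucket names (`PREVENTED`, `FOUND_INTERNALLY`, `FOUND_EXTERNALLY`, `EXPLOITED`) are assumptions inferred from the examples above, not necessarily the exact buckets in the chart, and the bugs listed are hypothetical. Buckets are ordered left (best) to right (worst), each security effort is modeled as moving a bug one bucket to the left, and `left_share` reports what fraction of outcomes landed in the leftmost buckets.

```python
from dataclasses import dataclass, replace
from enum import IntEnum


class Outcome(IntEnum):
    """Hypothetical outcome buckets, ordered left (best) to right (worst)."""
    PREVENTED = 0         # e.g. caught in design review or avoided via training
    FOUND_INTERNALLY = 1  # e.g. flagged by static analysis at check-in
    FOUND_EXTERNALLY = 2  # e.g. reported through a bug bounty
    EXPLOITED = 3         # used to harm users


@dataclass(frozen=True)
class Bug:
    name: str
    outcome: Outcome  # how the bug's story ended


def shift_left(bug: Bug) -> Bug:
    """Model a security effort moving a bug one bucket to the left."""
    return replace(bug, outcome=Outcome(max(bug.outcome - 1, Outcome.PREVENTED)))


def left_share(bugs: list[Bug], up_to: Outcome = Outcome.FOUND_INTERNALLY) -> float:
    """Fraction of bugs whose outcome landed at or to the left of `up_to`."""
    if not bugs:
        return 0.0
    return sum(b.outcome <= up_to for b in bugs) / len(bugs)


def by_outcome(bugs: list[Bug]) -> list[Bug]:
    """Rank bugs worst outcome first, regardless of what kind of bug they are."""
    return sorted(bugs, key=lambda b: b.outcome, reverse=True)


# Hypothetical inventory, assuming perfect knowledge of every bug.
bugs = [
    Bug("authz gap in design doc", Outcome.PREVENTED),
    Bug("RCE in upload handler", Outcome.FOUND_INTERNALLY),
    Bug("XSS in comments", Outcome.EXPLOITED),
    Bug("SSRF via webhook", Outcome.FOUND_EXTERNALLY),
]

print(left_share(bugs))           # 0.5
print(by_outcome(bugs)[0].name)   # XSS in comments

# Suppose check-in static analysis catches the SSRF before a researcher does.
improved = [shift_left(b) if b.outcome is Outcome.FOUND_EXTERNALLY else b for b in bugs]
print(left_share(improved))       # 0.75
```

Note that `by_outcome` ranks the exploited XSS above the internally found RCE, mirroring the point that how a bug's story ended matters more than what kind of bug it was.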

None of these ideas are new; the SDL and manufacturing are both about surfacing flaws earlier in the process. It’s worth calling out explicitly: most bugs will be found in the later stages, after software ships, because that is where most software spends most of its life: shipped and running. This does not mean you suck at security.

Our goal isn’t the impossible one of building flawless software; it is to strike the right balance of risk versus effort. Outcomes are the best proxy I’ve found to model this for my teams.

Next article: lessons learned from using this model to drive product security work.

Footnotes

1. This is, of course, sadly impossible.

2. This is not novel; the SDLC, manufacturing, and others all talk about it.